How to "get" tech

3 key steps your bank must take to survive


Written by Quintin Sykes, Cornerstone Advisors

Bad tech can sink a bank like bad loans. It just takes longer. Here's how to stay afloat.


When people mull over “cutting the cable” and switching to streaming or slinging their home entertainment, they tend to fall into two camps: those who understand the new technologies (or trust their tech-savvy offspring) and those who haven’t a clue and just stick with what they have.

In business, there is a similar technology “comfort” divide, and banks are no exception. There is one key difference between the consumer and business worlds, however. At home, it doesn’t matter too much, relatively speaking, if you just stick with cable. In business, increasingly, the ability to effectively assimilate new technology is mission critical, and perhaps even existential.

This article takes a top-down look at what differentiates tech embracers from tech avoiders in banking, in the hope that some in the latter camp, of which there are many, can find a way forward before they fall too far behind.

While many banks settle for “me, too” delivery experiences, some have chosen a different path. Leaders in these institutions “get” technology, understand the possibilities of deploying it (as well as the limitations), and apply it for the benefit of customers. There is an added benefit to this: Building processes and a foundation to leverage technology to improve customer experience enables bank employees to perform their jobs more efficiently.

Bank leaders who understand how to leverage technology apply it in a variety of ways. This article focuses on three applications in particular:

• Establishing a strategic data culture and leveraging data for revenue growth.

• Identifying and integrating technology partners into the bank environment, leveraging a vendor performance management mind-set.

• Using technology as an enabler of well-designed processes for the benefit of external and internal customers.

Strategic data culture

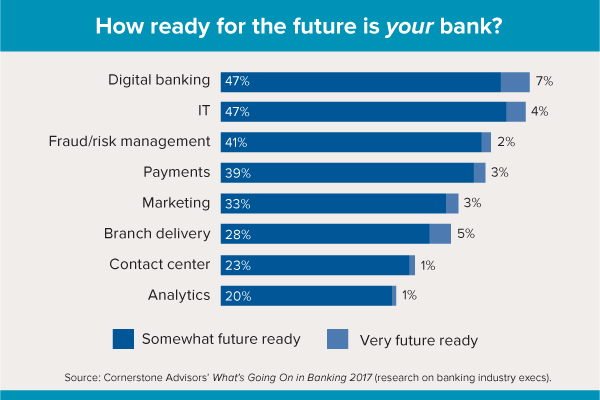

Cornerstone Advisors describes a future-ready analytics environment as one where a bank can use analytics as a competitive differentiator. In a recent Cornerstone survey of more than 300 financial institution executives, only 23% of respondents felt that their institutions were “very” or “somewhat” future ready.

Most financial institutions have not developed an approach to effectively manage data as an asset. The data remains “locked up” in core systems and application silos. These banks do have a basic set of information used for financial, management, and regulatory reporting, but they are unable to leverage that data for customer acquisition and retention.

When banks commit to a strategic data culture, acquiring specialized modeling and quantitative analysis skills along with tools that enable predictive analytics, their analytics maturity advances. Examples we have seen of banks successfully deploying analytics for revenue generation include extending investments in fraud and risk analytics into targeted campaigns that improve credit card cross sell and utilization, and building on investments in a single, unified customer view by adding external demographic and credit report data to enrich customer profiles for cross sell.

Limitations of early analytics

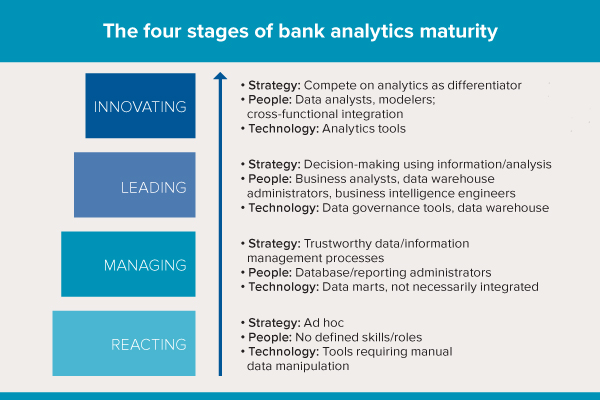

As indicated, a minority of banks can lay claim to having a data culture. Most have data managed and leveraged in silos and on an ad hoc basis. Banks without a data culture are typically in early states of analytics maturity. Cornerstone identifies four maturity states that a financial institution may find itself in. From least to most mature, they are Reacting, Managing, Leading, and Innovating.

Financial institutions in the Reacting state spend significant time massaging data by hand. Data access is limited to a few specialized users and the IT department, and the tools used to “munge” data (transform, combine, and clean it up) commonly include Excel, Access, and Monarch. This manual effort not only creates inefficiency but also increases the likelihood of errors and of multiple versions of the truth. Skill sets for governing and managing data are limited to report writers and database administrators.

The primary uses of data in most financial institutions remain compliance and risk management, notwithstanding the use of marketing solutions such as a Marketing Customer Information File. Extensive time is spent cleaning up data after the fact rather than at the point of creation, because no formal governance processes establish ownership of data and data quality. As a result, reports are often questioned, since users do not trust the results.

Path to strategic data culture

The best data warehouse, analytics, and visualization solutions alone do not enable banks to successfully integrate data analytics. Banks must start with a vision for the use of data analytics within the company, including organization and processes, before moving on to tools. This is where culture comes into play, rather than simply approving another project on the IT steering committee agenda. Leaders who understand the value of analytics start by engaging business units across the bank in developing and executing the vision, ensuring sound governance and data management processes are in place, and acquiring and developing the necessary skill sets.

Once the vision is agreed upon and communicated, bankers must formalize governance processes that establish ownership of data (e.g., “data stewards”), encourage collaboration across business units (e.g., set up “data domain stewards”), and provide oversight at a strategic level (e.g., establish a “data governance committee”). In many cases, these governance processes and tools already exist and simply may need to be formalized and enhanced. A similar, lightweight approach is described in Robert A. Seiner’s book Non-Invasive Data Governance.

With governance and organizational responsibilities established, a Managing maturity state is achieved, and data can begin to be managed as an asset. Data is cleaner, more detailed, and more accessible to the business users that need it, preparing the institution to move beyond basic regulatory and financial reporting.

This describes a process. There’s no way to implement across-the-board data governance and improved data quality all at once. Initial areas of focus—once governance is in place—include data definitions and quality standards for critical data elements in a select number of domains (banks subject to DFAST and CCAR stress-testing regulations know this well). This effort is then expanded to additional data elements and domains over time. Definitions for common metrics in use throughout the bank also will be established early in the analytics maturity life cycle. These initial efforts have the added benefit of documenting data sources and destinations.
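To make “data definitions and quality standards for critical data elements” concrete, here is a minimal Python sketch. It assumes a hypothetical customer domain with three critical data elements (`customer_id`, `tax_id`, `open_date`) and illustrative rules; in practice the definitions would come from the bank’s own data stewards. The point is to check quality at the point of capture instead of cleaning data after the fact.

```python
import re
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass(frozen=True)
class QualityRule:
    """A quality standard attached to one critical data element (CDE)."""
    element: str                     # name of the critical data element
    description: str                 # business definition owned by a data steward
    check: Callable[[object], bool]  # returns True when the value passes


# Hypothetical CDEs and rules for a customer domain; illustrative only.
CUSTOMER_RULES = [
    QualityRule("customer_id", "Non-empty identifier",
                lambda v: isinstance(v, str) and v.strip() != ""),
    QualityRule("tax_id", "Nine digits, no separators",
                lambda v: isinstance(v, str) and re.fullmatch(r"\d{9}", v) is not None),
    QualityRule("open_date", "Account open date, not in the future",
                lambda v: isinstance(v, date) and v <= date.today()),
]


def validate(record: dict, rules: list[QualityRule]) -> list[str]:
    """Return failed-rule messages; an empty list means the record passes."""
    failures = []
    for rule in rules:
        # A missing element is passed to the check as None and fails it.
        if not rule.check(record.get(rule.element)):
            failures.append(f"{rule.element}: {rule.description}")
    return failures


good = {"customer_id": "C-1001", "tax_id": "123456789", "open_date": date(2020, 1, 15)}
bad = {"customer_id": "", "tax_id": "123-45-6789", "open_date": date(2020, 1, 15)}
print(validate(good, CUSTOMER_RULES))
print(validate(bad, CUSTOMER_RULES))
```

Starting with a handful of rules in one domain and adding elements over time mirrors the incremental approach described above.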

With trustworthy, cross-domain data available, the business intelligence skills needed to leverage it can be acquired and developed. These additional resources can build dashboards and visualizations that let managers quickly grasp the performance of their business units. Data becomes available the next day, or even in real time, instead of ten days after month-end. Success stories are valuable for securing the management buy-in needed to encourage data-driven decision-making, at which point the Leading maturity state is achieved.

Finally, the highest analytics maturity state in our model, Innovating, can occur once data and reporting move beyond tools for risk management. Organizations that have a data culture and are able to execute on it deploy scorecards, dashboards, and analytics that move beyond risk and are used to improve customer experience, improve efficiency, and generate revenue for the bank.

By proactively managing data as an asset, banks can take the data and tools built for risk management and augment them with additional data and modeling, generating insights that can be used to acquire, cross sell to, and retain customers. Data in these organizations is used to understand how customers “feel,” as Ray Davis, chairman of $25 billion-assets Umpqua Bank, has observed, and ultimately how they actually behave and buy. Under Davis, Umpqua recently established a subsidiary, Pivotus Ventures, which among other things has been acquiring talent to further expand its capabilities in data science and advanced analytics.

Leveraging vendor portfolio management

Part of “getting” technology is understanding the possibilities it creates in executing on and enhancing the bank’s vision and strategies. Digital sales and service technology in particular continues to be one of the great strategic opportunities to improve customer experience. Banks that differentiate themselves via digital channels with solutions beyond those offered by their core solution providers provide an example of leveraging vendor portfolio management and related processes for the benefit of customer experience.

The vendor management office alone isn’t going to bring these solutions to market sooner, especially from relatively new providers. Business line stakeholders must be engaged, and the quantitative vendor management approach of the past, focused on reactive data collection and price negotiation, must evolve to a qualitative approach that emphasizes capability and service level, enabling proactive management of the bank’s vendor and solution portfolio. Cornerstone Advisors refers to this approach as vendor performance management.

Beyond legacy vendor management

Thinking about vendor management solely from cost, risk, and regulatory standpoints can stifle a bank’s ability to differentiate. This reactive, legacy approach may be effective in ensuring contracts don’t auto-renew, SOC 2 control reports are collected and reviewed, and vendor risk ratings are maintained, but it misses the boat on how well vendor capabilities align with the bank’s needs now and into the future.

The legacy approach to vendor management works for commoditized products and services, but it presents a barrier to providing superior customer experience and internal efficiency. It overlooks the capabilities of the many nontraditional (a.k.a. fintech) options now available. Without collaboration between vendor management and business units, passively accepting whatever a vendor provides becomes the norm, resulting in underused capabilities and delivery experiences that pale in comparison to those at other banks and especially at nonbanks, which have dramatically altered consumer expectations in recent years.

Applying vendor performance management

Banks with success in digital sales and service, as an example, typically incorporate an environment scan and establish vendor performance management stakeholder roles to measure effectiveness of existing solutions from their current portfolio of digital providers, identify additional solutions available from their current providers, and identify alternative providers including new market entrants.

Formally establishing stakeholder roles for vendor, application, and channel ownership within the bank ensures the right resources are engaged to participate in the qualitative analysis of existing and proposed solutions.
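The qualitative analysis these stakeholders perform can be recorded in a simple weighted scorecard that compares current providers with alternatives. The Python sketch below is an illustration only: the criteria, weights, provider names, and 1-to-5 ratings are hypothetical placeholders a bank would set itself, not Cornerstone’s vendor performance management method.

```python
# Hypothetical criteria and weights (they sum to 1.0); a bank would set its own.
WEIGHTS = {
    "capability_fit": 0.35,
    "service_level": 0.25,
    "roadmap_alignment": 0.25,
    "integration_effort": 0.15,  # rated so that 5 = easiest to integrate
}


def weighted_score(ratings: dict[str, int]) -> float:
    """Combine 1-5 stakeholder ratings into one weighted score on the same 1-5 scale."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    for criterion, rating in ratings.items():
        if not 1 <= rating <= 5:
            raise ValueError(f"{criterion} rating must be 1-5, got {rating}")
    return round(sum(WEIGHTS[c] * ratings[c] for c in WEIGHTS), 2)


def rank(portfolio: dict[str, dict[str, int]]) -> list[tuple[str, float]]:
    """Rank current and prospective providers by weighted score, best first."""
    scored = [(name, weighted_score(r)) for name, r in portfolio.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical ratings gathered from vendor, application, and channel owners.
portfolio = {
    "Incumbent digital banking": {"capability_fit": 3, "service_level": 4,
                                  "roadmap_alignment": 2, "integration_effort": 5},
    "Fintech entrant": {"capability_fit": 5, "service_level": 3,
                        "roadmap_alignment": 5, "integration_effort": 2},
}
print(rank(portfolio))
```

With these made-up ratings the fintech entrant ranks first despite a harder integration, which is the kind of trade-off a purely cost-and-risk review would not surface.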

Ideally, all employees can contribute to the environment scan by sharing what they experience as consumers. However, a formal process of scanning the external environment helps stakeholders understand where additional capabilities may be needed by the bank and what providers and solutions can address those needs. The scan should cover industry, technology, delivery, and/or regulatory trends relevant to bank strategies.

Expanding vendor risk appetite

Nowadays, virtually every company—new or established—that provides a banking application calls itself a fintech company. Nevertheless, there are some considerations for banks that decide to work with relatively new fintechs. These start-ups aren’t going to have years of audited financial statements available and may not have dozens of peer institutions live on their solutions.

Banks have to make adjustments to their risk appetites and vendor due diligence requirements to accommodate these companies. Being an early adopter means the vendor management team can’t spend six months on due diligence pursuing items they’re not going to get.

This mind-set shift can be a difficult but necessary step in organizations that have grown up with a risk-averse culture (i.e., most financial institutions).

Vendor risk is not the only risk appetite that must be adjusted. Forward-looking institutions also want to bring new features to customers, and not all of them will succeed. Early adoption of new solutions and capabilities means becoming comfortable and proficient with “test-and-learn” methodologies, including customer beta testing and internal proof-of-concept efforts, which enable user feedback, rapid solution deployment, and iteration. The failure rate of these initiatives will be higher, and feedback must be reviewed continuously to determine whether a given initiative needs further iteration or should be killed.
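One way to ground the “iterate or kill” call in data is to compare a beta feature’s conversion rate with a control group’s. The Python sketch below uses a standard two-proportion z-test; the conversion counts, the 5% significance level, and the scale/kill/iterate rule are hypothetical choices for illustration, not a method the article prescribes.

```python
from math import erf, sqrt


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1 + erf(x / sqrt(2)))


def two_proportion_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-proportion z-test. Returns (lift of B over A, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # all or no conversions in both groups: no evidence either way
        return p_b - p_a, 1.0
    z = (p_b - p_a) / se
    return p_b - p_a, 2 * (1 - normal_cdf(abs(z)))


def decide(conv_control: int, n_control: int, conv_beta: int, n_beta: int,
           alpha: float = 0.05) -> str:
    """Hypothetical rule: scale a clear win, kill a clear loss, otherwise iterate."""
    lift, p_value = two_proportion_test(conv_control, n_control, conv_beta, n_beta)
    if p_value < alpha:
        return "scale" if lift > 0 else "kill"
    return "iterate"


# Hypothetical beta results: control converts 120 of 1,000 users in each case.
print(decide(120, 1000, 160, 1000))
print(decide(120, 1000, 80, 1000))
print(decide(120, 1000, 125, 1000))
```

Feedback from beta users and proof-of-concept teams still matters; a test like this only tells the bank whether an observed difference is likely to be more than noise.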

Identifying and contracting with fintech providers is just a start. Banks that get technology have ensured they have an integration environment and skills availability (internal or external) that supports these emerging fintech companies as well as more established best-of-breed providers, ensuring they can chart their own course versus being reliant on large, incumbent core, delivery, and business application providers.

We have seen that when business units are engaged as partners with vendor management, vendor portfolio management serves not only as an effective tool for cost and risk management, but also as a means of maximizing the use of existing vendors and solutions to bring additional technology capabilities to the bank.

Engineering over energy

Banks that get technology ask the question Jeff Bezos of Amazon asked in his 2016 shareholder letter: “Do we own the process, or does the process own us?”

One recent trend among institutions pursuing a process improvement mind-set has been a focus on lending activities. For example, reengineering lending processes from an end-to-end perspective has produced substantial benefits for our clients in areas including mortgage and consumer loan origination via digital channels, providing not only a superior customer experience during the application process but also an efficient underwriting process behind the scenes.

Another example is streamlined small business lending processes that better align underwriting requirements with the size/risk of the credit, reduce turnaround times, and improve credit administration efficiency.

Brute force and culture roadblocks

Banks that have had success in the areas above haven’t applied technology exclusively. Their approach is to use a collaborative, engineering mind-set to first look at the process. Without that, it’s tempting for them to throw bodies and then technology at manual processes to improve efficiency. The brute force of additional headcount can get the daily work out the door, but eventually can result in risk management issues from errors as volume increases.

By not tackling process automation from an end-to-end, customer-facing perspective before applying technology, we see banks inhibiting their ability to realize the full benefits of automation in several ways including these:

• Automating a poorly designed process can leave wasteful steps in place, resulting in continued inefficiency.

• Pursuing process improvement within a departmental silo reduces the ability to realize enterprise-wide versus tactical efficiency benefits.

• Viewing processes from an internal customer versus an external customer lens reduces the chances of building processes that benefit the customer experience as well as the bank.

Using the loan origination example above, it’s easy to envision a scenario where a well-intentioned effort to improve the customer experience has the opposite effect. A bank could go through the effort to deploy a well-designed, easy-to-use digital loan application for its customers, but if it hasn’t thought through the process from application all the way to underwriting, documents, funding, and on into servicing, it could easily fail to meet customer expectations shaped by best-in-class providers within and outside of financial services. Without an end-to-end, customer-focused look at the process, poor communication, manual processes, unnecessary paper, and lengthy turnaround times will undercut the very experience the digital channel was meant to deliver.

High-growth institutions also run the risk of outgrowing managers who don’t expand their perspective beyond the bank’s four walls. If managers aren’t proactively seeking interaction with peers, participating in an external scan process, or leveraging third-party consulting and research, banks will discover that for many processes, what worked at a $2 billion-assets institution won’t work at one approaching $10 billion.

Strategic data culture, described earlier, also plays a role in engineering sound process. Without the ability to accurately measure and report success, change and improvement become difficult to sustain. “We need a guy/gal” is a standard refrain in organizations where gut instinct versus facts drives decisions. But temporary gains from hiring a superstar will succumb to inefficiency if process measurement and improvement is not addressed.

Evolving into a process mind-set

In our strategic planning work, we see many bank leaders who have difficulty saying “no.” If they are left unchallenged, they will pursue every customer segment and try to execute on many more projects than their institutions can successfully complete.

Three things we see in banks that are successful with process optimization and effective application of technology are:

1. Establish a clear focus in the strategic planning process—including areas where the bank wants to differentiate (see above on saying “no”).

2. Use bank governance processes to prioritize the right investments—including those that will provide clear customer as well as internal efficiency benefits—and to define the “pivot points” in the customer experience.

3. Formalize product, channel, and process ownership and responsibilities—a repeat theme of this article—with all three viewed from the perspective of end-to-end customer experience versus process silos.

Enhanced data analytics, discussed earlier, assist in making better process decisions. Dashboards and other reporting tools provide information on key performance indicators related to products, channels, and processes. This reporting enables targeting of process optimization efforts.

Using the loan origination process example again, an institution may identify mortgage lending as one of its differentiators, focusing investment on improving mortgage origination processes and related systems, and deferring initiatives focused on lower-volume lending segments. Dashboards and scorecards can be used to identify areas of focus for process improvement efforts, such as areas of friction in the application process leading to abandonment, bottlenecks in the process leading to longer-than-desired turn times, and opportunities to improve data quality at point of capture, reducing errors.
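The dashboard measures mentioned above, abandonment by application step and turn time, reduce to simple calculations over application records. The Python sketch below assumes a hypothetical log in which each application records the furthest step the applicant completed and, if funded, its submission and funding dates; the step names and data are illustrative, not taken from any particular loan origination system.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional

# Hypothetical ordered steps in a digital mortgage application.
STEPS = ["start", "income", "assets", "documents", "submit"]


@dataclass
class Application:
    furthest_step: str                # last step the applicant completed
    submitted: Optional[date] = None  # date the application was submitted
    funded: Optional[date] = None     # date the loan funded, if it did


def step_dropoff(apps: list[Application]) -> dict[str, float]:
    """Share of applicants reaching each step who did not reach the next one."""
    reached = {s: 0 for s in STEPS}
    for app in apps:
        idx = STEPS.index(app.furthest_step)
        for s in STEPS[: idx + 1]:
            reached[s] += 1
    dropoff = {}
    for here, nxt in zip(STEPS, STEPS[1:]):
        if reached[here]:
            dropoff[here] = round(1 - reached[nxt] / reached[here], 2)
    return dropoff


def median_turn_time_days(apps: list[Application]) -> float:
    """Median days from submission to funding, for funded applications only."""
    days = [(a.funded - a.submitted).days for a in apps if a.submitted and a.funded]
    return median(days) if days else float("nan")


apps = [
    Application("income"),
    Application("documents"),
    Application("submit", date(2024, 3, 1), date(2024, 3, 29)),
    Application("submit", date(2024, 3, 4), date(2024, 3, 22)),
]
print(step_dropoff(apps))
print(median_turn_time_days(apps))
```

In this toy data the largest drop-offs occur after the income and documents steps, which is where a friction review would start.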

Once the processes are defined, workflow technology, a primary tool used to enable end-to-end process automation, can be deployed for the benefit of both customer experience and internal efficiency.

This digital automation tool enables paperless, straight-through processing and can be applied to account-opening and loan-origination applications as well as delivery channel and imaging/content management solutions. Workflow automation reduces cycle time for processes, increases accuracy, and reduces friction of common customer activities.
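Workflow products differ by vendor, but the core idea of routing each file through defined states, letting routine files flow straight through and sending only exceptions to a person, can be sketched as a small state machine. The Python below is a hypothetical illustration: the states and the auto-approval rule (a nod to aligning underwriting with the size and risk of the credit) are assumptions, not any workflow product’s API.

```python
from enum import Enum, auto


class State(Enum):
    APPLIED = auto()
    DOCS_VERIFIED = auto()
    UNDERWRITING = auto()
    MANUAL_REVIEW = auto()
    APPROVED = auto()
    FUNDED = auto()


# Allowed transitions; any other move is rejected, keeping the process consistent.
TRANSITIONS = {
    State.APPLIED: {State.DOCS_VERIFIED},
    State.DOCS_VERIFIED: {State.UNDERWRITING},
    State.UNDERWRITING: {State.APPROVED, State.MANUAL_REVIEW},
    State.MANUAL_REVIEW: {State.APPROVED},
    State.APPROVED: {State.FUNDED},
    State.FUNDED: set(),
}


class LoanFile:
    def __init__(self, amount: float, credit_score: int):
        self.amount = amount
        self.credit_score = credit_score
        self.state = State.APPLIED
        self.history = [State.APPLIED]  # audit trail of every state visited

    def advance(self, target: State) -> None:
        if target not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot move from {self.state.name} to {target.name}")
        self.state = target
        self.history.append(target)


def process(loan: LoanFile) -> LoanFile:
    """Straight-through path; files failing the hypothetical rule wait for an underwriter."""
    loan.advance(State.DOCS_VERIFIED)
    loan.advance(State.UNDERWRITING)
    # Hypothetical rule: small loans to strong-credit borrowers are auto-approved.
    if loan.amount <= 50_000 and loan.credit_score >= 720:
        loan.advance(State.APPROVED)
        loan.advance(State.FUNDED)
    else:
        loan.advance(State.MANUAL_REVIEW)
    return loan


straight = process(LoanFile(amount=25_000, credit_score=760))
exception = process(LoanFile(amount=250_000, credit_score=700))
print(straight.state.name, exception.state.name)
```

Defining the process first and encoding it second is the order the article recommends; automating an undefined process would simply lock in its wasteful steps.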

Banks don’t have to be among the largest or go “full Six Sigma” to realize the benefits of process improvement. We have seen Lean, other widely deployed process improvement methodologies, and even home-grown approaches used in community banks as well as regional and larger banks to realize process efficiencies.

Clear direction on the bank vision and the areas of differentiation and a process mind-set enable institutions that get technology to apply it for maximum benefit of both external and internal customers.

Maximizing versus settling

Technology is fascinating, powerful, and can enable realization of substantial benefits to both customers and financial institutions, but success in deploying technology isn’t exclusively based on the technology itself. Instead, what matters just as much or more is the state of maturity of the processes that technology is applied to and the organizational structure and skill sets that are available in support of the technology.

Regardless of their size, when financial institutions establish a clear vision of where they wish to differentiate and commit appropriate capital and resources to the task, they can be successful in using technology to differentiate themselves from the competition.

About the author

Quintin Sykes is managing director of the Technology Solutions Practice at Cornerstone Advisors.
